I’ve recently (~6 weeks ago) replaced the very janky MPD server setup in my kitchen with a smaller Raspberry Pi 3B + DAC hat.

When I’ve attempted to run this setup in the past, it’s worked fine for maybe a week and then I’ve had network response issues – the CPU seems okay, but network connectivity is so bad, I can’t even SSH or send through an MPC stop command.

I originally figured that the issue here was weak WiFi on the Pi + weak WiFi access point and left it at that – since I seemed not to get the issue when I moved the pi into my office and connected via Ethernet.

However, I have a shiny new WiFi AP now, which has much better range and reliability, so I figured I’d give the Pi a go again – no fan, lower power usage, quiet.

Worked great! Until yesterday.

Yesterday we had a power outage. I still haven’t configured my media devices to retry NAS connections after power outages, so they often need to be re-rebooted when this happens so that they can access media libraries. This was the case with the Pi.

When it came back up, MPD was accessible but… slow again. And then songs would stutter out or controls would be unresponsive. SSH struggled to connect.

It was the old set of problems all over again.

But why?

I tried:

1) Completely unplugging the Pi and its PSU and leaving them before plugging back in – capacitors are sinister majicks, so maybe this was related to the power outage?

2) Updating the system

3) Disabling the GUI on startup (may have already been that way)

4) Checking for undervoltage messages in dmesg (none)

5) Checking wifi connectivity (iwconfig wlan0 – 64/70, no signal issues)

6) Swearing

None of these worked. Time for bed.

Upon investigating this morning, I had two “finds”, both from the mpd.log file:

- Lots of “alsa_output: Decoder is too slow; playing silence to avoid xrun”

- Lots of “zeroconf: No global port, disabling zeroconf”

Both these messages appeared AFTER the day of the outage but not before (except one zeroconf a few days earlier – but not 5 within a few minutes like I was receiving after)
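A quick way to confirm that kind of before/after pattern is to count the messages per day. Here is a minimal sketch, assuming each log line starts with a "Mon DD HH:MM : " style timestamp (check your own mpd.log first). The sample lines are made up for illustration and are not real log output:

```python
from collections import Counter

# Message fragments to look for, taken from the log lines quoted above.
PATTERNS = ("Decoder is too slow", "No global port, disabling zeroconf")

def count_per_day(lines):
    """Count matching messages per (day, pattern).

    Assumes each line begins with "Mon DD HH:MM : ", so the first two
    whitespace-separated fields identify the day.
    """
    counts = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) < 2:
            continue
        day = " ".join(fields[:2])
        for pattern in PATTERNS:
            if pattern in line:
                counts[(day, pattern)] += 1
    return counts

# Hypothetical sample lines, not real log output.
sample = [
    "Mar 03 18:01 : zeroconf: No global port, disabling zeroconf",
    "Mar 07 19:30 : alsa_output: Decoder is too slow; playing silence to avoid xrun",
    "Mar 07 19:31 : alsa_output: Decoder is too slow; playing silence to avoid xrun",
    "Mar 07 19:32 : zeroconf: No global port, disabling zeroconf",
]

for (day, pattern), n in sorted(count_per_day(sample).items()):
    print(day, "|", pattern, "|", n)
```

Feeding it the real file is a matter of `count_per_day(open(path))`, where `path` is wherever your `log_file` setting points.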

I found a few threads similar to this one which suggested the zeroconf issue was systemd related – the “fix” suggested there did not help me.

(Also: I’d been using systemd since day one and not had this issue)

Searches relating to the “decoder too slow” message yielded little; my CPU was never the issue and my wifi connection was solid.

I tried changing the MPD config to include these settings for my output, per some suggestions:

buffer_time "200000"

period_time "5084"

This did nothing.

The zeroconf issue puzzled me; I don’t need or use it, but had never had these errors before. Couldn’t I just disable it? At least that’s one fewer error to confound me.

In the mpd.conf file, you can find a line enabling/disabling zeroconf:

zeroconf_enabled "no"

This… fixed it?

It doesn’t make a lot of sense.

My current hypothesis is that actually there is some deeper problem causing this issue and zeroconf failing was exacerbating it – my MPD client is still a bit sluggish, but not unresponsive like before.

I have no idea why this happened or if it is related to the power outage or if something updated and broke things. I’ll leave it for now and see how it goes.

Author Archives: Jonathan

Adventures in OPNsense

Our house received an upgrade to full fibre optic last year, and with the increase in available home bandwidth came the opportunity to retire our old NetComm router/modem/WiFi unit, which had been, if we’re generous, “adequate”.

Or, in fact, inadequate in a number of ways – its WiFi range was actually abysmal, something I only found out when I migrated to a dedicated WiFi unit and discovered that a single device can actually cover our entire property.

Also, it “supported” IPv6, in the sense that it could, technically, have an IPv6 address for a brief period of time, before just quietly giving up on routing IPv6 traffic until you either reboot or get exasperated and switch off IPv6 support.

So in the spirit of questionable decisions, and egged on by friends who are far more skilled and experienced in this sort of thing, I purchased a cheap all-in-one fanless PC with four 2.5Gb ethernet ports.

For the curious, it runs at around 55 degrees in the Australian summer, 65 if I make the CPU think hard. Certainly an improvement over the poor old Mac mini I have running as an MPD server in our kitchen, which routinely idles at 80 degrees.

Because I can’t ever be content to do things the easy way, I put Proxmox on it first, with the intention of running OPNsense as a VM, alongside a PiHole instance.

My initial setup experience was tainted by an inability to get OPNsense to register a connection via the WAN port, which resulted in around 45 minutes of frustration before I realised that my ISP requires me to manually “kick” connections between router changes. As a result of this faffing about, I now at least have a document detailing exactly what each of the physical ethernet ports is called in Proxmox and what their MAC addresses are – probably something I should have sorted out before doing anything else anyway.

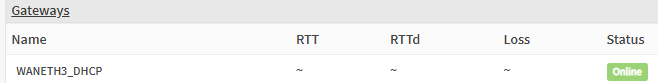

Once the router had a connection, everything ran great. For thirty minutes. After which, the router simply couldn’t talk to the gateway any more. No Internet connection.

In OPNsense’s dashboard, it indicated that EVERYTHING IS FINE. Which, you know, not true.

A reboot results in the same behaviour – everything is fine for around half an hour, then no WAN connectivity. Renewing the WAN connection fixes things… indefinitely. Until another reboot.

Thus began The Troubleshooting.

Various settings and configs were checked. IPv6 disabled (just in case), hardware VLAN filtering disabled etc etc

Same behaviour.

Install a completely fresh OPNsense VM – exact. same. behaviour.

And the whole time – once I renew the WAN connection, it fixes itself for as long as it remains up.

The command I ended up running in the shell:

configctl interface reconfigure wan

Magically fixed it… but only if it had already broken. That is, I couldn’t just run a renew at the end of the boot sequence and call it a day – I had to wait until the WAN connection failed before I could renew it – and that just didn’t fly (not that appending some magic words to a startup script would have made me happy).

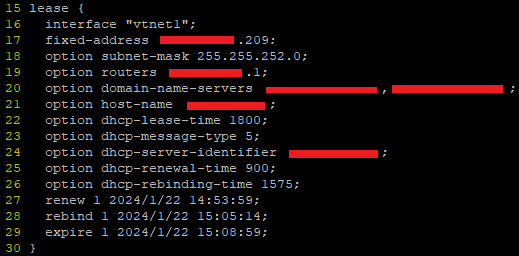

I ended up digging through the DHCP client leases file in /var/db (in my case dhclient.leases.vtnet1) and noticing some strange overlaps in the renewal/rebind/expiry etc times.

These leases files look like this:

Turns out, this is a log, rather than a config – FreeBSD is writing the dates and times there as a record, not an instruction. But this was the clue that helped me figure it out – when the router first booted, the lease file was written to, but the times for renewal and expiry passed without the router even trying to renew the connection.

Once I reran the renewal command manually, it worked fine, but crucially, it was writing dates and times that were wildly different to the initial time on bootup.

Anyway, as with all things, it was DNS.

Well, sort of.

When I set up the initial Proxmox install, I somehow (?) managed to set its DNS server to be 127.0.0.1. Surely that can’t have been the default?

Anyway, that meant that while it could do all the work of creating VMs just fine, it couldn’t, among other things, talk to NTP servers to figure out the time.

But it did know it was in the UTC+8 timezone. And it did assume that the hardware clock was in UTC time. Which it wasn’t. It was set to local time. So my VM host believed it was 8 hours ahead of when it actually was.

When my router booted up, it was given this time and it then obtained a lease and made a note to renew that lease in 15 minutes. 30 minutes, tops.

But it then used an actual DNS server to do an NTP lookup for the correct time – at which point it said to itself, “hoo boy, I am 8 hours ahead of where I should be! Lemme just fix that right up.” But its lease now thinks the expiry is not for another 8 hours – hence failing to renew and not having any WAN connectivity.
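To make the arithmetic concrete, here is a small Python sketch of that sequence. The boot time is invented, and the 15 minute renewal and 30 minute expiry are the rough figures mentioned above rather than values read from an actual lease:

```python
from datetime import datetime, timedelta

SKEW = timedelta(hours=8)             # host clock runs 8 hours fast
RENEW_AFTER = timedelta(minutes=15)   # assumed renewal interval
EXPIRE_AFTER = timedelta(minutes=30)  # assumed lease lifetime

true_boot = datetime(2023, 1, 10, 9, 0)   # made-up real time at boot
router_clock_at_boot = true_boot + SKEW   # what the router believes

# The client schedules its renewal against its own (wrong) clock.
renew_due_on_router_clock = router_clock_at_boot + RENEW_AFTER

# The lease really expires 30 minutes after it was granted.
true_expiry = true_boot + EXPIRE_AFTER

# Shortly after boot, NTP pulls the router clock back to the real time.
router_clock_after_ntp = true_boot + timedelta(minutes=1)

wait_until_renew = renew_due_on_router_clock - router_clock_after_ntp
time_left_on_lease = true_expiry - router_clock_after_ntp

print("Router will try to renew in:", wait_until_renew)
print("Lease actually expires in:  ", time_left_on_lease)
```

The renewal ends up scheduled more than eight hours out, while the lease itself only has about half an hour left, which matches the "works for thirty minutes, then dies" symptom.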

To add to the confusion with all this – FreeBSD, in all its wisdom, writes dates and times to the lease file in Coordinated Universal Time (UTC) – not local time – without any indication that this is what it is doing. So when the router first obtained that lease and wrote the wrong time to the log, it looked to me like the correct time, but when I renewed the lease manually, it had corrected its own time and was now logging what appeared to me to be times 8 hours in the past.
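Since the file gives no hint about the timezone, it can help to print the lease times in both UTC and local time side by side. A minimal sketch, assuming the usual dhclient layout of `keyword weekday YYYY/MM/DD HH:MM:SS;`. The sample entry is hypothetical and not copied from a real router:

```python
import re
from datetime import datetime, timedelta, timezone

# Matches lines such as "  renew 2 2023/1/10 01:15:00;"
# (keyword, weekday number, date, time). The layout is an assumption
# based on the usual dhclient lease format.
LEASE_TIME = re.compile(
    r"^\s*(renew|rebind|expire)\s+\d+\s+"
    r"(\d{4})/(\d{1,2})/(\d{1,2})\s+(\d{2}):(\d{2}):(\d{2});",
    re.MULTILINE,
)

def lease_times(text, local_offset_hours):
    """Return {keyword: (utc_time, local_time)}; later leases overwrite earlier ones."""
    local_tz = timezone(timedelta(hours=local_offset_hours))
    found = {}
    for match in LEASE_TIME.finditer(text):
        keyword = match.group(1)
        parts = [int(p) for p in match.groups()[1:]]
        utc_time = datetime(*parts, tzinfo=timezone.utc)
        found[keyword] = (utc_time, utc_time.astimezone(local_tz))
    return found

# Hypothetical lease entry for illustration only.
sample = """
lease {
  interface "vtnet1";
  renew 2 2023/1/10 01:15:00;
  rebind 2 2023/1/10 01:26:15;
  expire 2 2023/1/10 01:30:00;
}
"""

for keyword, (utc_time, local_time) in lease_times(sample, 8).items():
    print(f"{keyword:7} UTC {utc_time:%H:%M}  local {local_time:%H:%M}")
```

The `8` passed to `lease_times` is the UTC+8 offset mentioned earlier; change it to suit.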

Obviously, I think everything should always just be in UTC and that there are no problems or issues that would or could be caused by adopting a world free of timezones, but, please, indicate that somewhere, hey?

Anyway, OPNsense seems fine and good. I’ve managed to do some port forwarding and set up queues to minimise bufferbloat, so all is right with the world.

Now if I can just wrangle IPv6 DNS…

MPD Clients and Content Sorting

Perhaps you, like me, use an MPD server to manage and play a largish music collection. Perhaps, you, like me, are finding the odd weird thing happening in your client where multiple albums appear for the same set of tracks, or tracks are split between two different, yet somehow identical albums.

Probably not though. However, this post exists to provide me with a reminder of the techniques used to resolve those issues.

Before we begin, my primary client for this library is MALP on Android – it’s actually very good, but gets grumpy if your MPD is old (FWIW mine is 0.22 – I went to the trouble of compiling by hand) and is very strict about some tags (which is a Good Thing, but can be fiddly)

Step One – Basic Tag Hygiene

Make sure the tracks for your album all have ID3v2 tags (remove all the v1 tags – don’t need ’em) and that the track numbers, total tracks, artist, albumartist and album tags are all correctly set. Any variations here can cause issues where a track magically belongs to a different album with the same name, or lives on two different albums somehow.
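If the collection is large, a quick script can flag the albums worth looking at. This is only a sketch: it works on plain dictionaries with made-up tag values, and reading the tags out of real files is left to whatever tagging library you prefer. The key names (`album`, `albumartist`, `totaltracks`) are illustrative, not a fixed standard:

```python
from collections import defaultdict

# Tags that should be identical on every track of one album.
ALBUM_LEVEL_TAGS = ("album", "albumartist", "totaltracks")

def find_inconsistencies(tracks):
    """Group tracks by a normalised album name and report tags that vary."""
    by_album = defaultdict(list)
    for track in tracks:
        by_album[track.get("album", "").strip().lower()].append(track)

    problems = {}
    for album, members in by_album.items():
        for tag in ALBUM_LEVEL_TAGS:
            values = {track.get(tag) for track in members}
            if len(values) > 1:
                problems.setdefault(album, {})[tag] = values
    return problems

# Hypothetical tracks: the third has a trailing space and different casing.
tracks = [
    {"title": "One", "album": "Example Album", "albumartist": "Some Band", "totaltracks": 3},
    {"title": "Two", "album": "Example Album", "albumartist": "Some Band", "totaltracks": 3},
    {"title": "Three", "album": "Example Album ", "albumartist": "Some band", "totaltracks": 3},
]

problems = find_inconsistencies(tracks)
for album, tags in problems.items():
    print(album, "->", tags)
```

Grouping on a lower-cased, stripped album name is deliberate: it catches the near-identical names that a client would treat as two separate albums.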

Step Two – Check for MusicBrainz Tags

The MusicBrainz project and its tagger – Picard – are wonderful. However, music releases are squirrely and it can be hard to pin down exactly where your tracks fit in the listed releases.

In an ideal world, you can just add the appropriate release on MusicBrainz and Picard will simply tag all your tracks correctly – done and dusted.

imdoingmypart.gif etc etc

But not all tracks belong on MusicBrainz. And not all albums exactly match the releases. If you’re me, you have music that just isn’t on MB at all and never will be.

The issue comes when some tracks in an album have been tagged previously by Picard, but others have not – because Picard leaves behind super secret custom tags to help organise music. Which is great, except that MPD and MALP can read these custom tags and if not all the tracks in an album have matching ones, weirdness ensues.

So I downloaded this thing, which does a very specific job, but I used it solely to view “extended tags” and delete all the MB related stuff for albums with a mixed tagging history.

Step Three – Disc numbers?

If some of your album’s tracks have been tagged “disc 1/1” or similar and some have nothing in that tag – these tracks will still appear on one album, but the order may be all wrong. Just make your tags consistent – either all disc 1/1 or no data in those tags.

Empty tags for this can be interpreted as “disc 0”, causing those tracks to be erroneously listed prior to others.
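The effect is easy to reproduce. The sketch below is not how MALP or MPD actually sort; it just illustrates what happens when a missing disc tag is treated as 0 in a (disc, track) sort, using an invented track list:

```python
def sort_key(track, missing_disc=0):
    """Sort by (disc, track); a missing disc tag falls back to `missing_disc`."""
    disc = track.get("disc")
    return (missing_disc if disc is None else disc, track["track"])

# Hypothetical album where only some tracks carry a disc tag.
album = [
    {"track": 1, "title": "Opening", "disc": 1},
    {"track": 2, "title": "Second"},             # no disc tag
    {"track": 3, "title": "Third", "disc": 1},
    {"track": 4, "title": "Closing"},            # no disc tag
]

ordered = [t["title"] for t in sorted(album, key=sort_key)]
print(ordered)  # untagged tracks jump to the front

# Making the tags consistent restores the expected order.
fixed = [dict(t, disc=1) for t in album]
print([t["title"] for t in sorted(fixed, key=sort_key)])
```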

That’s all I have so far, I’ll update with screenshot examples later.

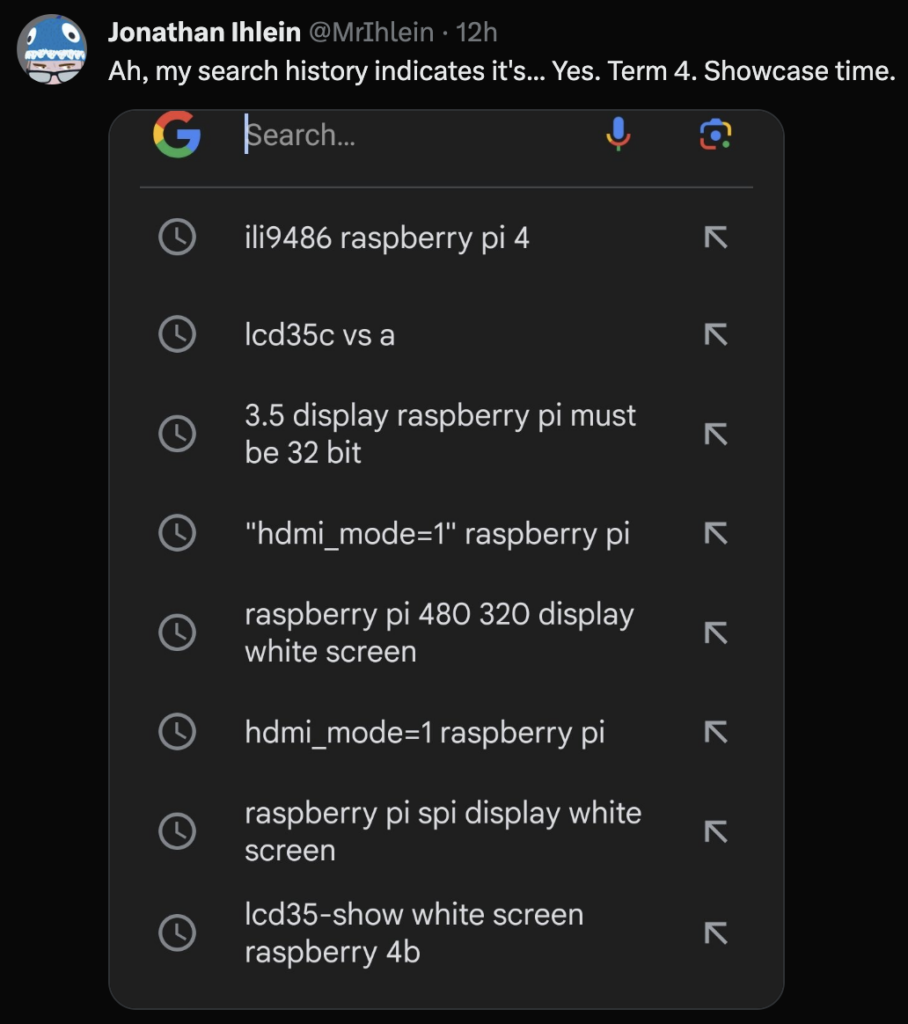

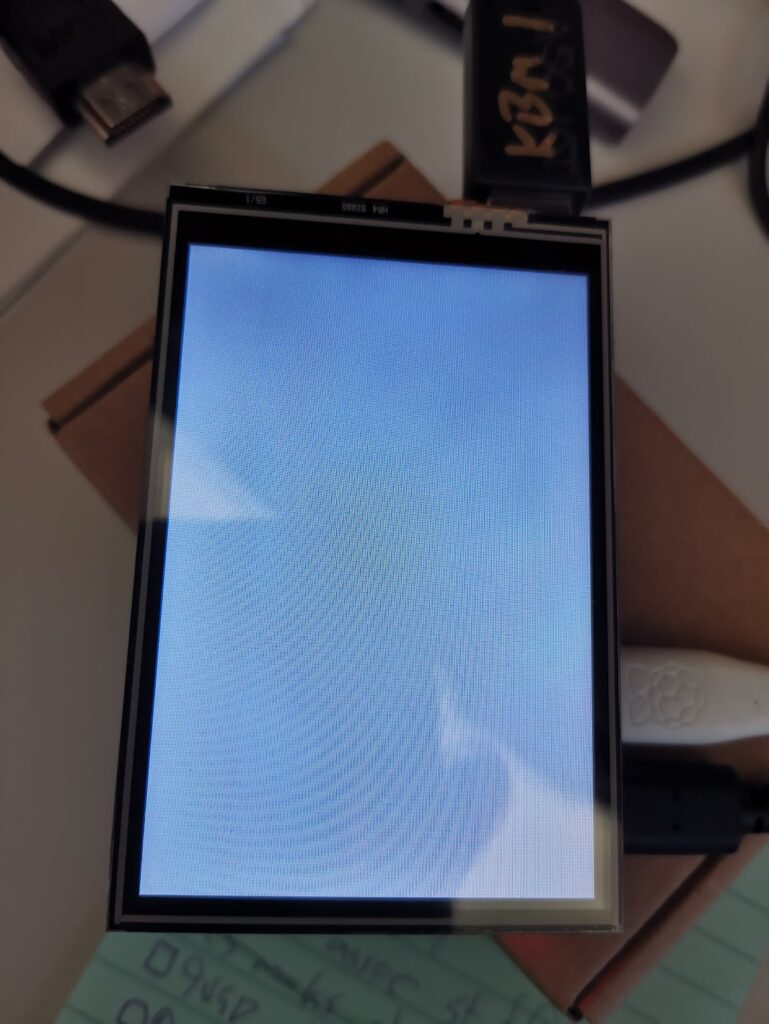

Raspberry Pi Screen Hats 2023

I’ve used little Raspberry Pi screen hats for various projects in the past – they are almost always a genuine pain to set up, especially if touch support needs to be calibrated, but they’re generally not more than a 30 minute job.

Except this year.

I had an old, but still on the market 3.5″ display that a student wanted to use for their project (lots of Pepper’s ghosts this year). It just would not behave with the latest Raspberry Pi OS running on a Pi 4B.

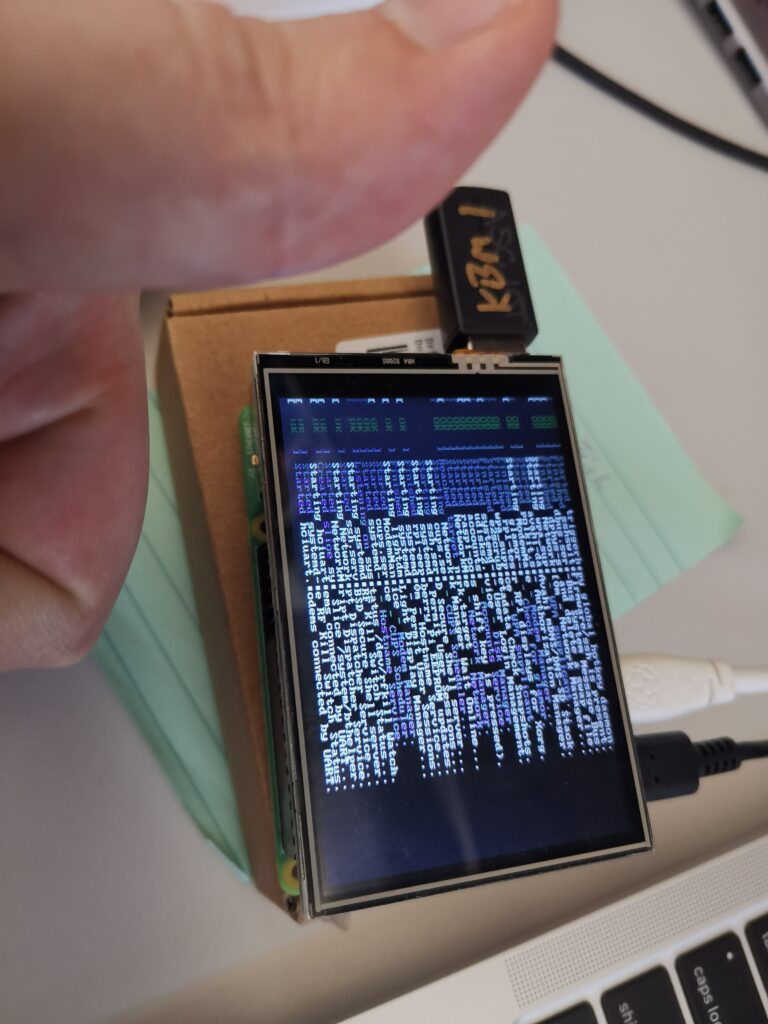

After trying the usual suspects – faffing about with various almost identical drivers from Waveshare and GoodTFT (I ended up deciding that our particular hardware is the knockoff one from GoodTFT, rather than the Waveshare original) and tweaking various settings in /boot/config.txt and raspi-config – I stumbled upon this post.

Long story short, the old dtoverlay setting for these displays was this:

dtoverlay=tft35a:rotate=90

But that’s apparently not supported in the new Raspberry Pi OS – you need to use this:

dtoverlay=piscreen,drm

Next challenge: to get the desktop displaying. It’ll show me a terminal login (ctrl-alt-F2), but X will not start :-/

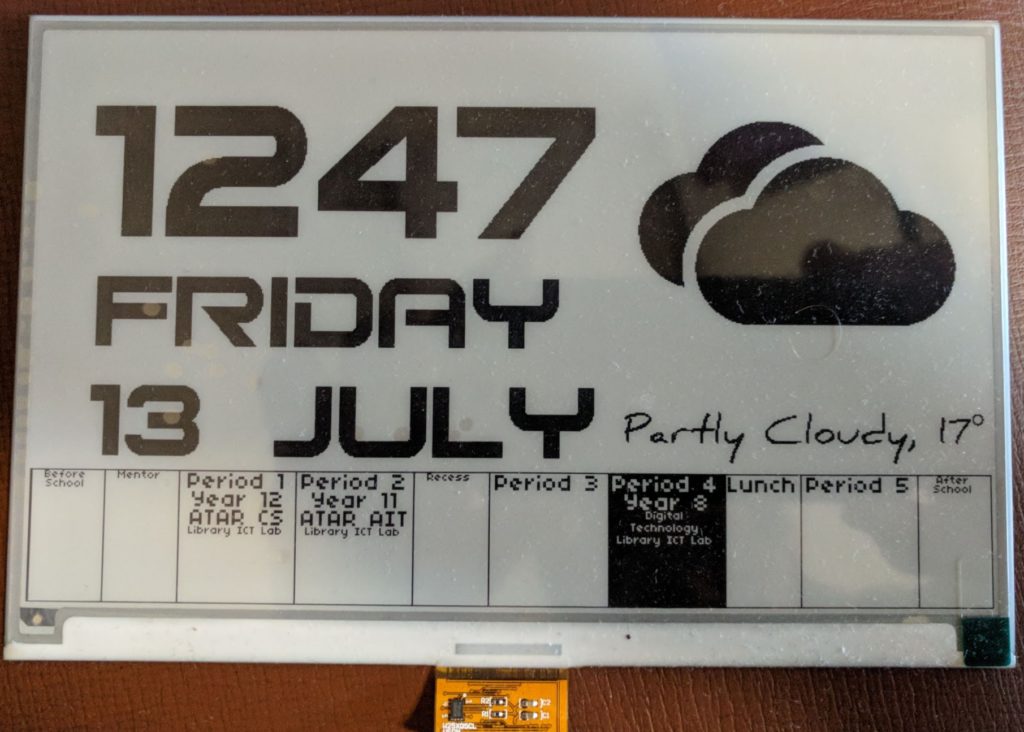

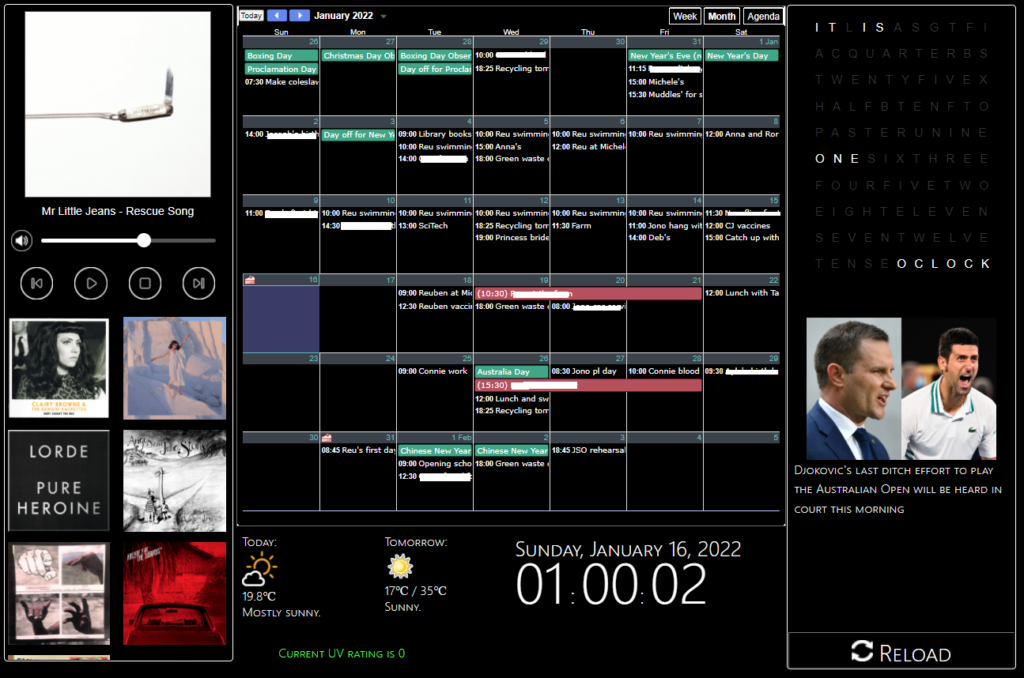

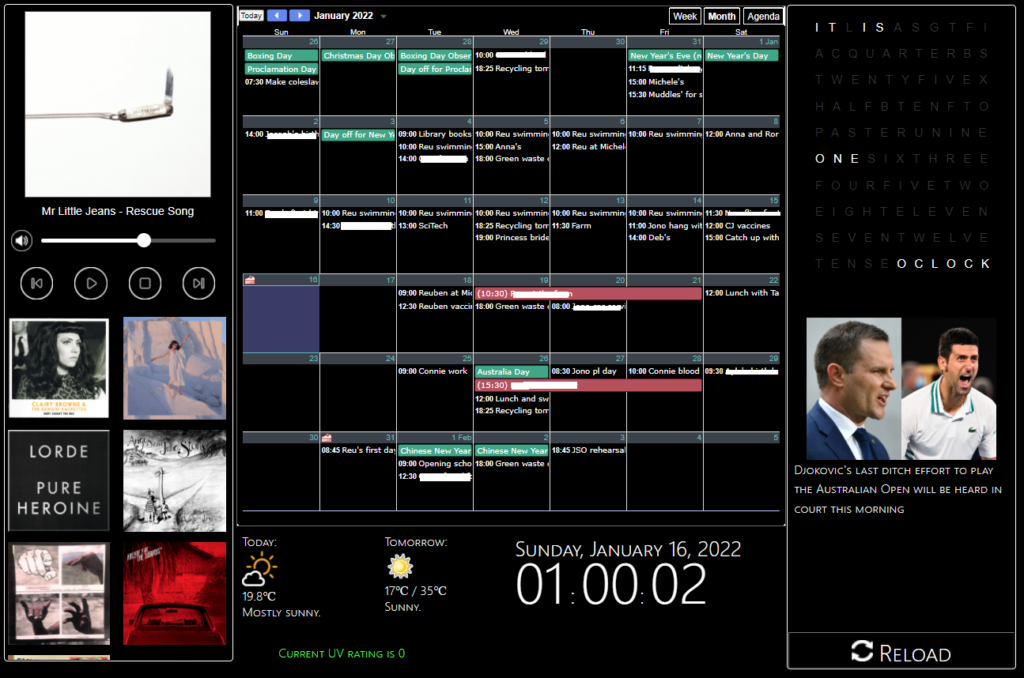

A Control Panel/Dashboard

Here was my vanity project this summer:

I’ve been meaning to do something like this for around a year – having an easy to view calendar not on our phones and controlling the MPD server above the kitchen were the main drivers.

I’m going to go through each component of the panel before giving an overview of how the whole thing hangs together, in an endeavour to kinda-sorta document the thing for when it inevitably breaks.

A Music Player (except not)

I tried a few music servers before just settling on a raw MPD install. I was using a Raspberry Pi 3, but it kept freaking out with maintaining WiFi (no idea why) so I ended up with the current server – Ubuntu running on an old Mac Mini via a USB external HDD (since the SATA controller died on the Mac).

I’m astonished this thing works at all, to be honest. Even acts as a bluetooth speaker when needed.

The issue with the MPD server was in operating it – the MALP app remote controller is fine (though quirky in its own way), but not super convenient or transparent to use when wandering around the kitchen. What I wanted was an easily accessible control to simply play/stop/next and display track details.

There are existing web applications I could have used for this job, but they were either too hard to configure right or hugely overkill for what I wanted. None of them quite fit right inside an iFrame either, which was frustrating.

It’s not possible to create a pure JavaScript controller for MPD – it speaks its own protocol over a plain TCP socket and doesn’t allow for WebSocket connections, therefore any web app solution would need a CGI backend. So, fine, I’ll roll my own.

What you see above is actually two iFrames – the top (player) section and the bottom (album select) section.

The top section can issue AJAX commands to the backend, written in Python using the surprisingly well-documented Python-MPD2 library.
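For a feel of what such a backend does underneath, here is a rough sketch that talks to MPD’s plain-text protocol directly. It deliberately swaps python-mpd2 for the standard library `socket` module so it runs without extra packages, and it is not the panel’s actual code. The host, port and sample reply are assumptions for illustration:

```python
import socket

def parse_response(text):
    """Turn MPD's "key: value" lines into a dict, stopping at the final OK."""
    result = {}
    for line in text.splitlines():
        if line == "OK":
            break
        if line.startswith("ACK "):
            raise RuntimeError(line)   # MPD reports errors as ACK lines
        key, _, value = line.partition(": ")
        result[key] = value            # repeated keys are overwritten
    return result

def send_command(command, host="localhost", port=6600):
    """Send one command to MPD and return the parsed reply."""
    with socket.create_connection((host, port), timeout=5) as conn:
        with conn.makefile("r", encoding="utf-8", newline="\n") as reader:
            reader.readline()          # greeting line, starts with "OK MPD"
            conn.sendall((command + "\n").encode("utf-8"))
            lines = []
            for line in reader:
                lines.append(line)
                if line == "OK\n" or line.startswith("ACK "):
                    break
    return parse_response("".join(lines))

# Hypothetical reply to "currentsong", used here instead of a live server.
sample_reply = (
    "file: Some Artist/Some Album/01.flac\n"
    "Title: Some Track\n"
    "Artist: Some Artist\n"
    "OK\n"
)
print(parse_response(sample_reply))
```

With a server running, `send_command("currentsong")` would return the same kind of dictionary, and `send_command("stop")` or `send_command("next")` cover the basic controls.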

This is all pretty straightforward, but for two things: keeping the current track data fresh and accessing album covers.

Keeping track data current doesn’t have an elegant solution – I have the JavaScript query the backend every 4 seconds and check for differences in the track name or album artwork file. It’s not fancy, but given I can’t access MPD directly via JS, keeping it constantly up-to-date is beyond my meagre ability.

MPD supposedly allows for access to binary data containing album covers, but the rules for this are opaque and inconsistent – I have a number of albums with corresponding images in the right directories, but no artwork shows up.

MALP works around this by (semi-successfully) pulling data from MusicBrainz where possible. So I did the same – an artwork backend informs the panel of the best image to use based on existing files and downloads the appropriate album cover where artwork doesn’t already exist.

This mostly works, but is hardly robust.

Album Selection

This is mostly smoke and mirrors – I lack the patience or inclination to build a system for gathering data on all the artists or albums in our collection. Instead, I figured we only have a handful of albums at any time we’re really listening to. I’ve created stored playlists for those using MALP and another backend script runs a cron job once a day to add any new playlists to the frontend.

What was most “fun” about this was of course passing the playlist names back and forth – they’re replete with lovely juicy characters such as apostrophes and ampersands which break URLs something fierce.

As a result, the JavaScript on the front end base64 encodes the playlist names before URI component encoding the base64 string and the Python backend undoes all that to load the appropriate list.
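The Python half of that round trip can be sketched as below. It assumes the front end base64 encodes the UTF-8 bytes of the name, which is a guess at the detail rather than something stated above. The function names and the playlist name are made up for the example:

```python
import base64
from urllib.parse import quote, unquote

def encode_playlist_name(name):
    """Mirror of the front end: base64 first, then URI component encoding."""
    b64 = base64.b64encode(name.encode("utf-8")).decode("ascii")
    return quote(b64, safe="")

def decode_playlist_name(param):
    """Undo the front end's encoding: URI decode first, then base64."""
    b64 = unquote(param)
    return base64.b64decode(b64).decode("utf-8")

name = "Mum's Favourites & Other Hits"   # hypothetical playlist name
encoded = encode_playlist_name(name)
print(encoded)
print(decode_playlist_name(encoded))
```

The second encoding step matters because base64 output can itself contain `+`, `/` and `=`, all of which have special meanings in URLs.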

At this point I’m remembering why I never stuck with frontend development back when I worked as a dev.

A note about jQuery:

The music player is the only part of the project to use jQuery. I resolved not to use any frameworks where possible – mostly out of bloody-mindedness, but also because it wasn’t really essential for any of the panel components – but the exception was marquee.

Yes, that marquee.

You see, for long track names, they won’t fit in the 300 odd pixels set aside for the player. So for those tracks, I wanted the name to scroll when the track was playing.

To get a reliable marquee effect in this day and age, you apparently have to use a jQuery plugin and about 20 lines of CSS.

Calendar

One does not simply make a calendar in software. Time is evil when it comes to software development – I’m torn as to whether JavaScript or the entire concept of time itself is worse.

Okay, it’s JavaScript – I have to actually use JS more often.

Rather than reinvent the extremely complex and fraught wheel of time, we can just use Google Calendar’s “embed your calendar as an iFrame” feature. Perfect!

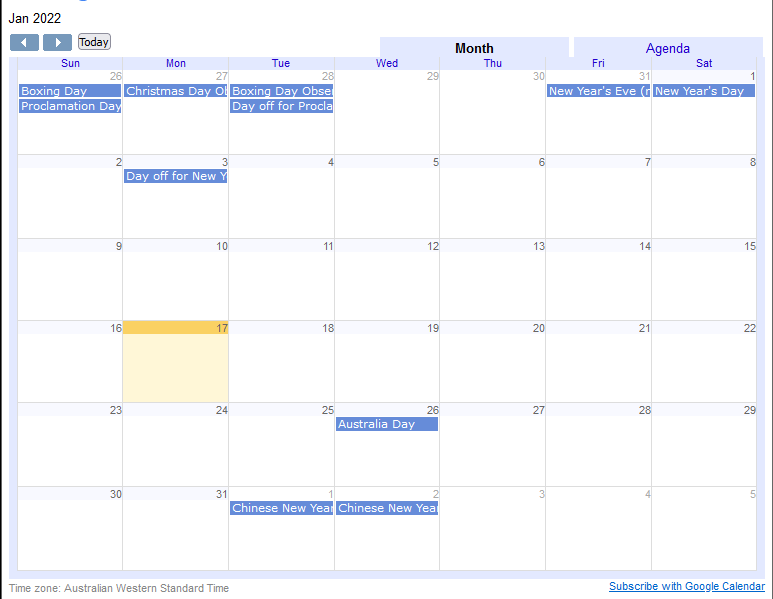

It looks like this:

Hmm. That doesn’t look like the rest of my panel.

A brief word about the panel’s colour scheme:

I’m not good at colour schemes. More than that, I struggle to care about them much. It’s not that I’m colour blind, I’m just largely colour agnostic. I mean, I’m not going around using hotdog stand theme or something, but beyond that, well, ¯\_(ツ)_/¯

Here’s the thing though – you can’t just restyle the contents of an iFrame when it comes from someone else’s server – there are very important security reasons for that. Given this was a project purely for use in my kitchen, on a device inaccessible to the outside world, I did look for workarounds to this security policy.

As a result, I found a lot of people discussing some super bad ideas for circumventing the browser’s cross-origin protections, and there’s probably a fortune to be made in bug bounties if I looked up their LinkedIn pages and did some half-hearted prodding on the web apps run by their companies.

So disabling those protections is a non-starter.

Next best option: User Scripts!

You might know these as Greasemonkey on Firefox or Tampermonkey on Chrome. They are excellent for bending the world of the web to your own twisted vision.

A basic userscript later and I’m forcing the browser to restyle the Google Calendar in a dark theme. It is absolutely, totally and in all other ways perfect, and I’ll not be moved on the issue.

Don’t click the “agenda” or “week” buttons.

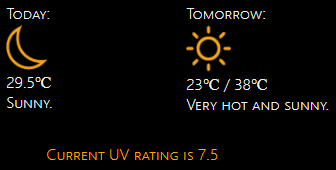

What’s the weather like? Nice.

(You’ll note the “sunny” icon in the screenshot at the top doesn’t match this one – Chrome aggressively caches images and it’s challenging to have it download a changed version)

This was a hot mess. So to speak.

There exist APIs for weather which are free. None of the ones I found were worth a damn for local weather – often off by 2-6 degrees (43 degree day? It happily reports that it’s 37!)

There exist APIs which are very expensive. This is not suitable for my flimsy summer panel.

Then… there’s *coughs* scraping weather websites.

I won’t go into detail, but to ensure that the scraping only happens a handful of times a day, I wrote a Python script with Beautiful Soup to snag the precis for today and tomorrow along with the temperatures and dump them into text files on the webserver. Then, the weather app reads that data in every 15 minutes or so.
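The general shape of that kind of script looks something like the sketch below. To keep it runnable without extra packages it swaps Beautiful Soup for the standard library’s `html.parser`. The class names, the sample HTML and the output file names are all hypothetical, since a real page needs its own selectors:

```python
from html.parser import HTMLParser
from pathlib import Path
import tempfile

class ForecastParser(HTMLParser):
    """Collect the text of elements whose class is in WANTED.

    Assumes the wanted elements contain plain text with no nested tags.
    """
    WANTED = {"precis", "max-temp"}   # hypothetical class names

    def __init__(self):
        super().__init__()
        self.current = None     # class name of the element being captured
        self.found = {}         # class name -> list of text values

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for name in classes:
            if name in self.WANTED:
                self.current = name

    def handle_data(self, data):
        if self.current and data.strip():
            self.found.setdefault(self.current, []).append(data.strip())

    def handle_endtag(self, tag):
        self.current = None

def scrape(html):
    parser = ForecastParser()
    parser.feed(html)
    return parser.found

# Made-up markup standing in for a downloaded forecast page.
sample_html = """
<div class="day"><p class="precis">Sunny.</p><span class="max-temp">38</span></div>
<div class="day"><p class="precis">Mostly sunny.</p><span class="max-temp">41</span></div>
"""

forecast = scrape(sample_html)
out_dir = Path(tempfile.mkdtemp())   # stand-in for the web server's directory
(out_dir / "precis.txt").write_text("\n".join(forecast["precis"]))
(out_dir / "temps.txt").write_text("\n".join(forecast["max-temp"]))
print(forecast)
```

Writing the results to small text files means the panel only ever reads local data, so the scraped site is hit only as often as the cron job runs.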

Weather icons are usually pretty awful. I like these ones.

UV was… trickier.

When my wife asked for UV info, I said, “Easy. It’s summer, therefore it’s always extreme. Pack sunscreen and wear a space suit.”

But no, the spousal request was for moment-to-moment UV rating values (or thereabouts).

The official UV rating site for Australia was last updated when Kings of Leon were a big deal on the radio.

I guess at least Kings of Leon released new material since then?

Some digging unearthed a JSON file shuttling from a server to a script on the page and then into some terrible graphing libraries. As well as many, many commented lines of code that were clearly not meant to make it into production.

I pointed some more Python at the JSON file and… oh great, it’s a giant array of UV data for every minute of the day. I’m going to have to iterate through the whole thing and… wait, there’s a property at the bottom of the file called “current_uv_rating”, perfect!

Nope. It’s always set to zero.

Iterating it is. Another cron job runs the Python every half hour.
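The iteration itself is short. In this sketch the JSON layout is a guess for illustration: a `readings` list of time-ordered entries where minutes that have not happened yet hold null. The real file’s field names will differ:

```python
import json

def latest_uv(raw_json, now_hhmm):
    """Return the most recent non-empty reading at or before `now_hhmm`.

    Assumes a hypothetical layout: a "readings" list of
    {"time": "HH:MM", "uv": number or null} entries in time order.
    """
    data = json.loads(raw_json)
    latest = None
    for reading in data["readings"]:
        if reading["time"] > now_hhmm:   # zero-padded HH:MM compares correctly as text
            break
        if reading["uv"] is not None:
            latest = reading
    return latest

# Invented sample data in the assumed layout.
sample = json.dumps({
    "current_uv_rating": 0,              # always zero, so ignored
    "readings": [
        {"time": "11:58", "uv": 11.2},
        {"time": "11:59", "uv": 11.4},
        {"time": "12:00", "uv": None},   # not measured yet
        {"time": "12:01", "uv": None},
    ],
})

print(latest_uv(sample, "12:00"))
```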

Have I got news for you?

Getting ahold of news headlines was surprisingly challenging too – there isn’t an API for our nationally funded provider (er, that is, nationally funded provider not directly owned by our home grown Dark Lord).

They do offer RSS feeds (am I hearing decades old music again?) which they seem to have been very enthusiastic about around the time that everyone was done using RSS feeds. Most of the info on ABC’s site regarding RSS feeds is from circa 2011, and I’m cautiously using the word “most” as that implies there’s a lot more information than there actually is. Which is close to none.

There does exist a “just in” feed – an XML file with headlines, links and relevant images for the most recent stories, regardless of topic or popularity. I don’t know what dark science or eldritch divination led me to find it, because you sure as heck can’t track it down using either Google or the ABC’s own search functionality.

At any rate, another Python script + cron job (TM) later, and I’m slurping down headlines to display every 25 seconds on the panel.
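Pulling headlines out of an RSS file needs nothing beyond the standard library. The sketch below assumes an ordinary RSS 2.0 layout with `<item>` elements containing `<title>` and `<link>`. The sample feed is invented, and images are left out for brevity:

```python
import xml.etree.ElementTree as ET

def parse_headlines(rss_text, limit=10):
    """Return (title, link) pairs from the <item> elements of an RSS 2.0 feed."""
    root = ET.fromstring(rss_text)
    headlines = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        if title and link:
            headlines.append((title, link))
        if len(headlines) >= limit:
            break
    return headlines

# Made-up feed for illustration.
sample_feed = """<rss version="2.0">
  <channel>
    <title>Example feed</title>
    <item>
      <title>First headline</title>
      <link>https://example.com/news/1</link>
    </item>
    <item>
      <title>Second headline</title>
      <link>https://example.com/news/2</link>
    </item>
  </channel>
</rss>
"""

for title, link in parse_headlines(sample_feed):
    print(title, "->", link)
```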

Here’s The Thing, though: when you visit an article by smearing your finger on the screen, it opens in a new tab. Which would be dandy, except the only way to get back to the panel again is to:

- Press the Windows symbol on the Surface device running the panel (a feature sadly missing from more modern Surface Pros) to access the taskbar, given the panel’s browser is in full screen mode.

- Access the on screen keyboard from the taskbar (no, not the pretty one. The accessibility one with all the functionality).

- Press fn-F11 to drop the browser out of full screen mode.

- Close the tab. Oops, you missed the little “X” with your giant finger. Try again. No, that’s a new tab. There we go.

- Access the keyboard again. Press fn-F11 to go back to the full screen mode.

That dog won’t hunt, Monsignor.

I need a big, meaty, easily touchable “close” button on articles when they open.

iFrames are once again unsuitable for this job – I could just display the article in an iFrame with a button outside it – but ABC have (entirely reasonably) prohibited using their stuff in an iFrame, specifically to prevent nefarious purveyors of stolen bits from claiming their work as their own (not that I’m a purveyor of stolen bits. I don’t purvey them, thank you very much.).

Once again we turn to… Userscripts. Hooray.

The CMS used by our National Broadcaster is, like all CMSes, prone to creating multiple obscure classes in its HTML.

Therefore finding the right element to inject a button into was tricky.

It’s not robust by any means, but it currently finds the second link on the page (the ABC logo) and sticks a close button right next door. It then tracks down the “FixedHeader” data component in the page and injects another button – this is the overlay banner that sticks around at the top once you scroll down.

Et voila – one click return to the panel.

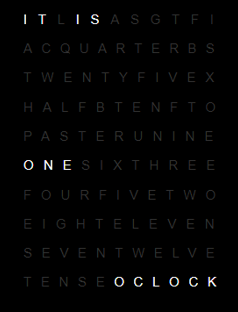

The #bestoftimes, the #worstoftimes

This is a JavaScript clock. It is pretty silly. Maybe one day I’ll replace it with a graph of utilities usage or something. It’s a nice clock though.

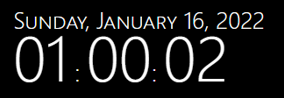

This is another clock. This one is more sensible and useful. I don’t know what it might do on Wednesdays in September since I didn’t use a fixed width font and it’s pretty close to the full width of its container.

I’ll be shocked if the panel is still fully working in September though.

How does this dang thing work?

It runs on an old Surface Pro, which is comically overpowered for the job it needs to do. That said, it is quite old and water damaged – part of the reason for the dark theme (apart from the fact that everyone’s doing it, it’s cool man, what are you, some kind of square?) is because water ingress damaged the screen some time ago, leaving weird blotches that are only visible when displaying bright images.

The panel itself is just some HTML with iFrames and a little JS to reload the calendar and weather. It’s actually not even hosted on the Surface, but instead sits on our media centre PC/NAS.

The media centre runs the necessary backend cron jobs and hosts the music, which is played on the third, far more interesting and terrifying PC, the MPD server.

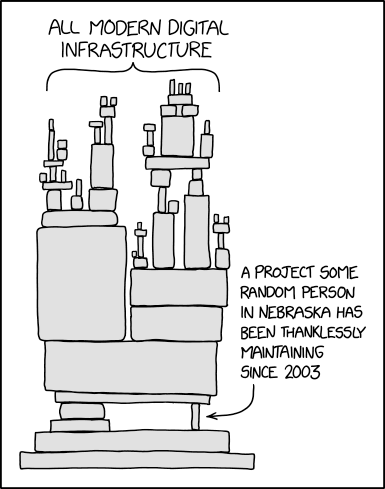

To sum up – this runs off three physical machines, three cron jobs, a locally hosted site, an MPD server, two userscripts which are dependent on their target sites “not changing too much”, various Python scripts for scraping sites (which also need to not change much, please and thank you) and a Python script parsing and processing an RSS feed that its owner no longer seems to care for.

The panel’s days are numbered – everything on the web is transient, even local pages which are only used in a single household.

This isn’t necessarily a bad thing – a bit over a year ago, we had a Google Nest we’d received for “free”. It was fine.

It played music – but not necessarily the music we wanted or in the order we wanted. And it had ads.

It could tell you the weather, but you had to ask.

It could tell you your schedule, sort of.

It could add to our shopping list – provided you used the shopping list page Google created, which has no API.

When it stopped working, I searched for a solution and found nothing useful. It was apparently a known flaw. Had we paid for it, we’d probably have some recourse for replacing it.

I didn’t feel the need to buy another.

At least when this panel breaks, I’ll be able to find out why and have a chance at fixing it.

Bon Voyage, little kitchen bench automaton!

Let’s Encrypt on Ubuntu Bionic

So I’ve been getting emails from Let’s Encrypt telling me that my certs are coming in through the old ACME v1 protocol and that if I wish to continue receiving certificates, I need to update my certbot.

I figured this was just because the Ubuntu version I had been running was a little on the old side and was no longer receiving non-security updates, prompting me to update to Bionic (18.04).

But the emails kept coming, and it became apparent that the version of certbot I had was woefully out of date (0.31 vs 1.3.0).

Turns out, the certbot team doesn’t have anyone with expertise in packaging for Debian systems – so this has fallen by the wayside (even for their own PPA).

And yet… the EFF’s website containing guidance for getting certbot up and running on various systems still provides a guide for installing using the PPA. There is a justification for doing so, but as of June 2020, the software installed via PPA will be useless, so I’m not entirely sure why it’s still the recommended method 2 months out.

At any rate, here’s what I did to update mine in order to continue working with my hosting software:

wget https://dl.eff.org/certbot-auto

sudo mv certbot-auto /usr/local/bin/certbot-auto

sudo chown root /usr/local/bin/certbot-auto

sudo chmod 0755 /usr/local/bin/certbot-auto

/usr/local/bin/certbot-auto --help

(as per instructions from https://certbot.eff.org/docs/install.html#certbot-auto)

That ain’t all though. This worked fine on my main server, but the secondary server threw an error:

    /usr/local/bin/certbot-auto --help
    Requesting to rerun /usr/local/bin/certbot-auto with root privileges...
    Creating virtual environment...
    Traceback (most recent call last):
      File "<stdin>", line 27, in <module>
      File "<stdin>", line 19, in create_venv
      File "/usr/lib/python2.7/subprocess.py", line 185, in check_call
        retcode = call(*popenargs, **kwargs)
      File "/usr/lib/python2.7/subprocess.py", line 172, in call
        return Popen(*popenargs, **kwargs).wait()
      File "/usr/lib/python2.7/subprocess.py", line 394, in __init__
        errread, errwrite)
      File "/usr/lib/python2.7/subprocess.py", line 1047, in _execute_child
        raise child_exception
    OSError: [Errno 2] No such file or directory

Turns out, that “no such file or directory” came from the fact I’d never used virtual environments on the secondary server.

Quickly fixed by installing the package:

sudo apt install virtualenv

I’m informed that on Red Hat-based systems, you need the python3-virtualenv package, but that didn’t do anything for me.

And then, to maintain compatibility with calls for the old certbot and letsencrypt commands:

cd /usr/bin/

sudo ln -s /usr/local/bin/certbot-auto letsencrypt

sudo ln -s /usr/local/bin/certbot-auto certbot

Running certbot with the --version arg should then show you a current version.

I’m surprised at how little support the deb based systems are getting from the certbot crew – I’ve been otherwise impressed with Let’s Encrypt’s work thus far.

Using Arduino as a USB HID

I haven’t worked often with Arduinos or similar devices (Micro:bits notwithstanding) and the primary reasons are twofold:

- Residual trauma from having written code in C++ years ago

- Working in meatspace is inherently icky to me, as someone who has only dabbled comfortably with software in the past.

Software is often ineffable – particularly when you’re writing in unfamiliar languages and especially when you’re writing code where you have a slippery grasp on the mechanics1. This is exacerbated when the error could be in your software, how you transferred it to the device, in the wiring of the peripherals or in the peripherals themselves.

However, for certain projects, the need to work with physical components cannot be circumvented – and thus, the small necessity of creating an ultra simple USB keyboard, which is actually just a button connected to an Arduino.

We have Arduino UNO R3s coming out the walls here – they seem to have come with various kits over the years and so they’re in plentiful supply.

Fortunately, this guy did it already – seven years ago, no less – and it seems straightforward enough.

I will stress that I am a newcomer to the world of Arduino, so I may have the following things wrong, but as I understand it, the process works like this:

- The UNO has a processing chip that does the execution of a program. It can be programmed to, amongst other things, detect a button push and send a character code (or bunch of ’em) through the USB chip.

- The USB chip exists to provide a serial interface for transferring code to the UNO. Not for being a HID2. But – and props to Arduino for making this so easy – the firmware that runs that USB chip can be replaced, such that it behaves as a USB HID and not for transferring programs.
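The “character code” in the first point travels as a small fixed-size report. Here is a minimal Python sketch of that layout, assuming the standard 8-byte boot keyboard report: byte 0 for modifier bits, byte 1 reserved, bytes 2 to 7 for key codes, with letter codes starting at 4 for “a”. The function name is made up for the example:

```python
def key_report(letter, modifiers=0):
    """Build an 8-byte boot-keyboard report for a single letter key.

    Byte 0 holds modifier bits, byte 1 is reserved, bytes 2-7 hold up to
    six key codes. Letter usage IDs start at 4 for "a".
    """
    if len(letter) != 1 or not ("a" <= letter.lower() <= "z"):
        raise ValueError("expected a single letter a-z")
    report = bytearray(8)
    report[0] = modifiers
    report[2] = 4 + ord(letter.lower()) - ord("a")
    return bytes(report)

RELEASE = bytes(8)  # all zeros: no keys held

print(list(key_report("v")))
print(list(RELEASE))
```

A key press is one report with the key code set, followed by an all-zero report to release it. Passing `modifiers=0x02` (left shift) would turn the “v” into a capital “V”.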

A Button for Vs

Largely arbitrarily, my connected program requires the user to press “V” in order to trigger a reset. There is no keyboard connected (and I don’t want there to be one). My UNO single button keyboard to the rescue.

Step one is to write the code and transfer it to the UNO. Mine is a little different to other examples I’ve seen:

    // A Button for Vs - using external pullup resistor
    int ledPin = 8;          // My LED is connected to the pin labelled "8"
    int state = 0;           // Initialising the state to "not pushed"
    uint8_t buf[8] = { 0 };  // A buffer array for our chars

    #define PIN_BUTTON 2     // Button is connected to pin 2

    void setup()
    {
      Serial.begin(115200);         // The version of the USB firmware supports a baud of 115200
      pinMode(ledPin, OUTPUT);      // declare LED as output
      pinMode(PIN_BUTTON, INPUT);   // declare pushbutton as input
      delay(200);
    }

    void loop()
    {
      state = digitalRead(PIN_BUTTON);
      if (state == HIGH)
      { // check if the input is HIGH (button released)
        digitalWrite(ledPin, LOW);  // turn LED OFF
      }
      else
      {
        digitalWrite(ledPin, HIGH); // turn LED ON
        buf[2] = 25;                // Letter V
        Serial.write(buf, 8);       // Send keypress
        releaseKey();
      }
    }

    void releaseKey()
    {
      buf[0] = 0;
      buf[2] = 0;
      Serial.write(buf, 8);  // Release key
      delay(500);            // This prevents a bunch of presses registering
    }

First, there’s the presence of the LED – feel free to omit this. I added it when things weren’t going so well (read on) to provide some indication that the software was actually running.

Second, I’ve explicitly mentioned the use of an “external pullup resistor”. I note this, because, as a noobie to the field, I had no idea what the heck this was or even if I needed it. The reference code and circuit diagram ignored it completely (and worked, briefly). Some sites explicitly insist on a resistor being wired in (see below) and others talk about mysterious “internal pullups” and have slightly different code and diagrams without the resistor.

So what gives?

Reasonable explanations abound, if you go looking. In short: it’s not enough to just have a button/switch wired in that can be opened or closed, because ~~meatspace sucks~~ the wibbly wobbly nature of the real world means that all sorts of little voltages can register in that open circuit. This is called a “floating” state, and since it’s undefined, we need to nail it down, and we do that by creating a second part to the button circuit that is permanently connected (and thus permanently either HIGH or LOW, depending on how you connected things). When the button is pressed, it creates a new connection in that circuit, which results in the opposite state. With a pullup, the pin sits at HIGH through the resistor until the button connects it to ground and it reads LOW, which is why the code above treats LOW as “pressed”. All very predictable and much safer.

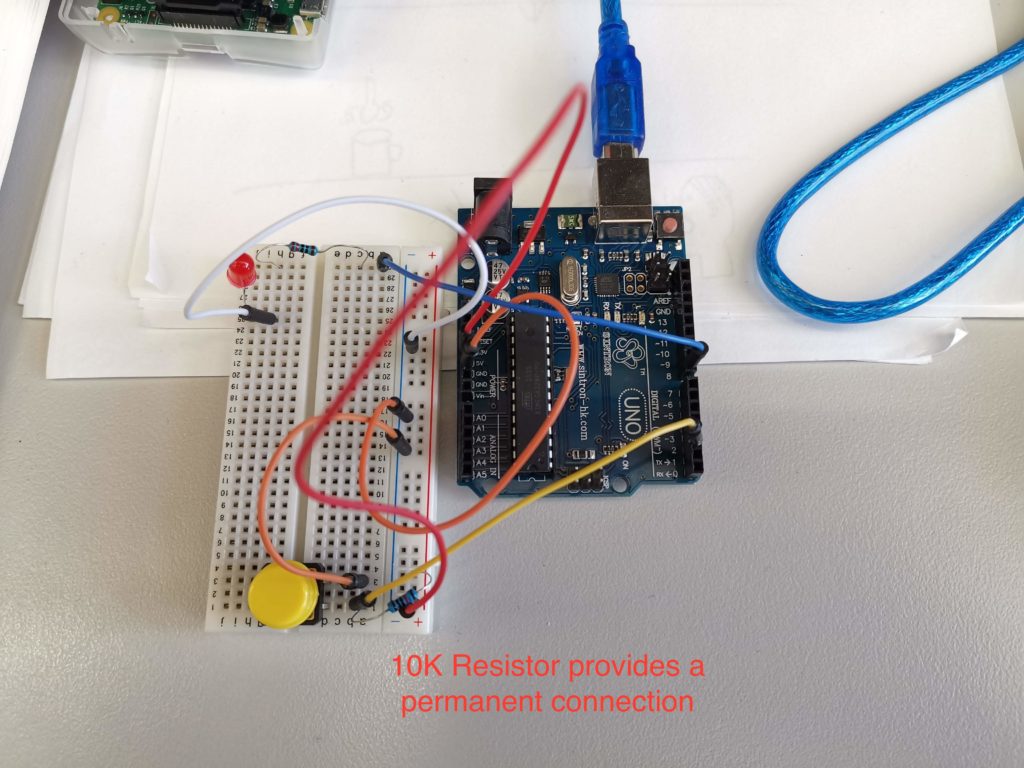

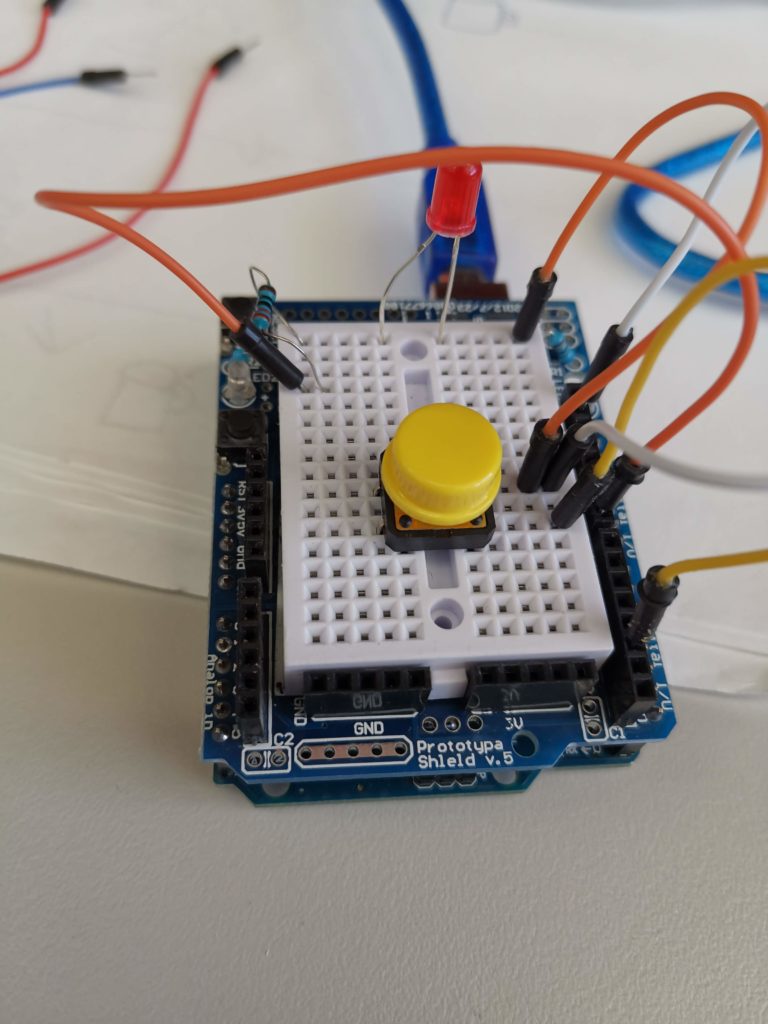

My setup using an external pullup is here:

But there’s a better way to go – the UNO does, in fact, have internal pullups. You can use these instead by making a small change to the code, which results in cleaner wiring and fewer components.

Code:

    int ledPin = 8;
    int state = 0;
    uint8_t buf[8] = { 0 };

    #define PIN_BUTTON 2

    void setup()
    {
      Serial.begin(115200);
      pinMode(ledPin, OUTPUT);
      pinMode(PIN_BUTTON, INPUT_PULLUP);  // It's subtle, but that's the change
      delay(200);
    }

    void loop()
    {
      state = digitalRead(PIN_BUTTON);
      if (state == HIGH)
      {
        digitalWrite(ledPin, LOW);
      }
      else
      {
        digitalWrite(ledPin, HIGH);
        buf[2] = 25;
        Serial.write(buf, 8);
        releaseKey();
      }
    }

    void releaseKey()
    {
      buf[0] = 0;
      buf[2] = 0;
      Serial.write(buf, 8);  // Release key
      delay(500);
    }

The difference is not super obvious, but it happens where you declare the pin mode for your buttons: pinMode(PIN_BUTTON, INPUT_PULLUP);3

Why did I faff about with resistors so much?

Because I did some silly things first.

Flashing Firmware – Even when it’s safe, it’s dangerous

Flashing the firmware on the UNO is surprisingly pain-free. You use a screwdriver to short the reset pins on the UNO, you get a program called “dfu-programmer” and point it at the appropriate firmware file and it’s done. More or less.

It was the “more or less” that got me.

You see, the process is a little more involved than that. First, you upload a program like the one above. Then you need to erase the existing firmware to make room for the new one. Then you upload the new one. Then you reset it using dfu-programmer.

    sudo dfu-programmer atmega16u2 erase
    sudo dfu-programmer atmega16u2 flash Arduino-keyboard_115200.hex
    sudo dfu-programmer atmega16u2 reset

That’s three commands which are easy to mix up when you’re in a hurry.

They have to be done in the right order. And if you want to change the program that’s running on your new “keyboard” (for example, to alter the key or add new buttons, or add a longer delay) – you first have to flash it back to the original firmware to let it upload the program, then flash it again to the keyboard firmware to test it.

To make my life “easier”, I wrote two scripts to issue these commands. And because I am super clever, I chained the commands with the logical “and” – && – to ensure that each subsequent command would only run if the previous one succeeded.

My Mac took this to mean “run the commands in any order”4. I spent an hour or so trying to get my formerly working keyboard to do anything, before realising that it was flashing the firmware and then cheerfully erasing the firmware it had just flashed.

Sigh.

The moral here is to run the commands manually, or at the very least read the output which will tell you exactly what it is doing.
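If you do still want a script, here is a minimal sketch of one that runs the three dfu-programmer steps strictly in order and stops at the first failure, printing each command as it goes. The commands are copied from above; the function name and the injectable `run` parameter are hypothetical, added only so the ordering can be checked without a board attached.

```python
import subprocess

# The three steps from the post, in the order they must run.
# Chip name and .hex filename are copied from the commands above.
STEPS = [
    ["dfu-programmer", "atmega16u2", "erase"],
    ["dfu-programmer", "atmega16u2", "flash", "Arduino-keyboard_115200.hex"],
    ["dfu-programmer", "atmega16u2", "reset"],
]


def flash_keyboard_firmware(run=subprocess.run):
    """Run each step in order and stop at the first one that fails.

    `run` is injectable (an assumption of this sketch) so the sequence
    can be exercised without real hardware.
    """
    completed = []
    for step in STEPS:
        print("running:", " ".join(step))
        # check=True raises CalledProcessError on a non-zero exit code,
        # so later steps never run after a failure.
        run(step, check=True)
        completed.append(step[2])
    return completed
```

Calling `flash_keyboard_firmware()` from a script started with sudo would mirror the manual commands; the printed lines give you the "read the output" part for free.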

Addendum: HID numeric codes for keyboard presses can be found here.
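To show where the `buf[2] = 25` in the sketches above comes from, here is a small Python illustration of the 8-byte keyboard report layout. It assumes the standard boot-keyboard format (one modifier byte, one reserved byte, then up to six key codes) and the usual letter numbering where a is 4 and z is 29; the function name is made up for this example.

```python
def keyboard_report(letter, modifiers=0):
    """Build an 8-byte keyboard report for a single lowercase letter."""
    if len(letter) != 1 or not "a" <= letter <= "z":
        raise ValueError("this sketch only handles a-z")
    # HID usage IDs for letters run a=4 ... z=29.
    usage_id = 4 + ord(letter) - ord("a")
    # Byte 0: modifier bits, byte 1: reserved, bytes 2-7: up to six keys.
    return bytes([modifiers, 0, usage_id, 0, 0, 0, 0, 0])


# An all-zero report means "no keys held", which is what releaseKey() sends.
RELEASE_ALL = bytes(8)

print(list(keyboard_report("v")))  # [0, 0, 25, 0, 0, 0, 0, 0]
```

Swapping the letter is then just a matter of changing the number written into `buf[2]` on the Arduino side.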

Headless Raspberry Pi – Circa 2019

With the advent of the Raspberry Pi 3 and Zero W, newer Pi-s come with wireless baked-in, which is (IMHO) a welcome addition to help make setting up a Pi without Ethernet much more straightforward.

In fact, given my employer’s aggressively antisocial wireless network 1, it has become the norm for me (and my students) to set up a fresh Raspbian install using either a mobile hotspot or by tethering their phones. In neither case is Ethernet a useful option2.

“Headless” installs are setups which do not require a keyboard, mouse and monitor – given the ubiquity of networking and the low-power of Pi-like devices, it makes sense to be able to use an SSH session to do all your setup and get your device running without the hassle of directly using I/O in front of it. Plus, all the reference sites you’re using are probably open in the hundred or so tabs on your main computer3.

Without further rambling, here is the current easiest way to set up a Raspbian Buster install to be headless, using MacOS as the host machine:

- Write your image to a micro SD card. I am lazy and use Balena Etcher rather than DD, although at the time of writing, it’s a little broken when used with MacOS Catalina.

- Remove your SD card and pop it back in. Do not boot your Pi with it at this stage. You need to make these changes for the first boot or Raspbian will ignore them.

- It should appear mounted as “boot”. This is the only section of the new filesystem you can read and write on the host machine.

- Open a terminal, because we’re all adults here and GUIs are only for circumventing the DD command using Balena Etcher.

- Change directory to the boot partition on the card and create a file called “ssh”:

    cd /Volumes/boot
    touch ssh

- Edit a new file called “wpa_supplicant.conf”4

nano wpa_supplicant.conf

Put this content in it, replacing the placeholders in quotes (but keep the quotes) with bits relevant to you:

    update_config=1
    country=AU
    ctrl_interface=/var/run/wpa_supplicant

    network={
        scan_ssid=1
        ssid="Your Network Name"
        psk="Your Network Password"
    }

That’s it – you can eject your card safely5, pop it in your Pi and power up.

Some additional notes, for fun and profit:

Q. How do I find the IP of my Pi after it boots, so that I can SSH in?

A. If you’re using an Android phone to hotspot – you can find a list of connected devices in the settings along with each of their IPs.

If you’re using a mobile hotspot or home router, log in to its web interface to view connected devices, or connect your phone to the network and use a network scanning tool such as Fing.

If you’re using an iPhone to hotspot – umm. I don’t know. Last I checked, they tell you how many devices are connected, but not any details about them (thanks Apple! I hate it!) and Fing doesn’t return details when it’s run on the hotspot itself. Arp has decidedly mixed results. There is apparently an app that can be downloaded to show you details of devices connected to your iPhone.

Q. Isn’t there other info I should include in my wpa_supplicant config? Like the country code?

A. Probably. It works fine for me without country code and I’m all for minimising the content that has to be customised in a config. Perhaps AU and UK wireless devices just interconnect fine, or perhaps some other WiFi voodoo has done away with the need for CCs. If you’re in the US, does it not work without a country code? I do know that in a previous version of Raspbian (Jessie, perhaps?), the Pi would refuse to connect if CC wasn’t set, so do with that what you will.

UPDATE IN 2020:

You absolutely do need to set the country code, especially if you’re using the 5GHz bands. Recent RPi OS builds seem to enforce this again. I’ve included the AU code in the example above (since I’m in AU), but you’ll need to set yours as per this list.

Q. I have to put my password in a config in plain text. What gives?

A. You don’t have to. There are ways to hash it and store it in the config.
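For the curious, a minimal sketch of the hashing, assuming the standard WPA/WPA2 personal key derivation (PBKDF2 with HMAC-SHA1, the SSID as salt, 4096 iterations, 32 bytes out). This is the same calculation the `wpa_passphrase` tool that ships with wpa_supplicant performs; the function name here is made up for illustration.

```python
import hashlib


def wpa_psk(ssid, passphrase):
    """Derive the 256-bit pre-shared key as 64 hex characters."""
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("WPA passphrases are 8 to 63 characters long")
    key = hashlib.pbkdf2_hmac(
        "sha1",                      # HMAC-SHA1 is what WPA/WPA2-PSK uses
        passphrase.encode("utf-8"),  # the human-readable password
        ssid.encode("utf-8"),        # the network name acts as the salt
        4096,                        # iteration count
        32,                          # 32 bytes = 256-bit key
    )
    return key.hex()
```

The resulting 64 hex characters go on the `psk=` line without quotes. Note that the hashed key still lets anyone who reads the file join that network; it only hides the human-readable password.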

Here’s the thing though – I’m betting this WiFi password is either a home network or a hotspot – and in either case, it’s a shared key in the literal sense of the term – lots of people know it, and it’s trivially easy to change it (at least on the router).

If you’re setting this up on a corporate network, my little config above won’t get you connected anyway. I’ve made it work in the past, but mobile devices I’ve connected to our corporate network have been… idiosyncratic. They lose Internet access or randomly change IP or need to be power cycled with a 15 minute delay every day. In short, I haven’t found Raspbian, or many other Linux distros for that matter, to be cooperative with (what is probably a poorly configured) corporate WiFi, so in this day and age, it’s easier just to work around it rather than try to join it. :-/

Micro:bit as a game controller

The Goal

My aim was originally to set up a Micro:bit as a Bluetooth HID1, but it turns out that’s beyond my ken.

My initial attempts were using C++ or PXT as per these projects:

PXT Bluetooth Keyboard

BLE HID Keyboard Stream Demo

The first indicates that it will sync with MacOS and work with Android. I could only get a brief sync with MacOS sometimes and while it synced with Android, I couldn’t get any keystrokes to show up.

The second briefly showed up as a possible pairing device, but beyond that I got no joy. It doesn’t help that I couldn’t understand just about any of the code.

Revising Expectations

Okay, this is beyond my abilities at the moment. But there’s a cheaty way – based on the concepts used in this project.

Sam El-Husseini’s project uses three easy to implement components:

- Pushing data to the serial bus (USB) from the Micro:bit when a button is pressed

- A listener program on the host device that turns the data from serial into actions on the host

- A second/more Micro:bits that send Bluetooth messages to the first device – in this way, it would act as a proxy (basically a proprietary dongle).

Component 3 is compelling, because implementing Bluetooth communication between Micro:bits is almost comically easy in the interpreted languages (such as Python, JavaScript etc). I imagine it’s probably significantly easier to implement in C++ than proper BT pairing too, but I’ll cross that bridge when I’m good and ready.

Part 1 – Proof-of-Concept Tethered Device

I decided to implement the first two components and leave the third for another time 2.

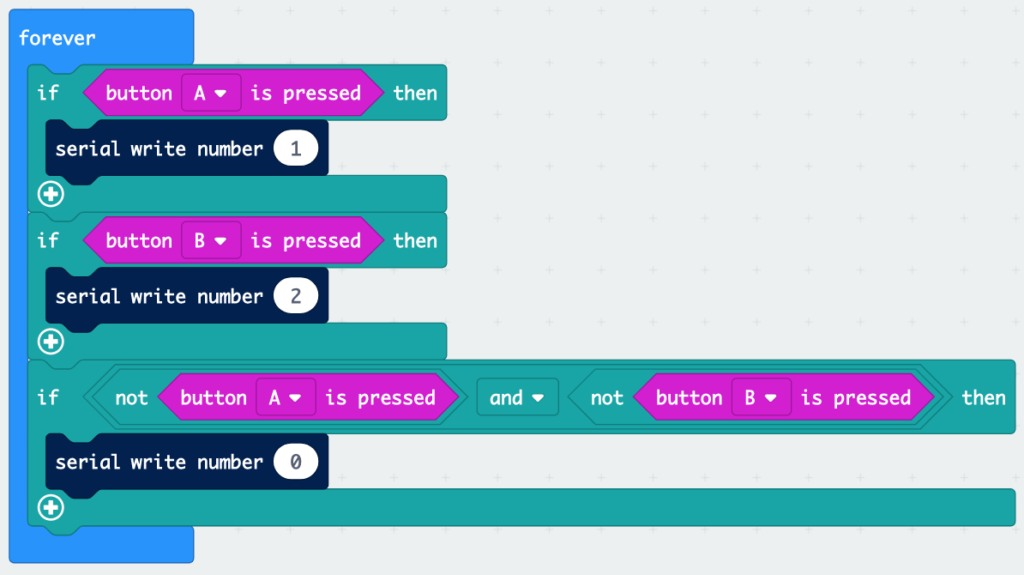

The tethered MB uses very similar code to Sam’s project above:

    basic.forever(function () {
        if (input.buttonIsPressed(Button.A)) {
            serial.writeNumber(1)
        }
        if (input.buttonIsPressed(Button.B)) {
            serial.writeNumber(2)
        }
        if (!(input.buttonIsPressed(Button.A)) && !(input.buttonIsPressed(Button.B))) {
            serial.writeNumber(0)
        }
    })

The only modification here is to send a stream of numbers rather than linefeed delimited strings3.

The third if statement is to work with the key events that the host program needs to implement – if we’re just typing, sending the keystrokes in isolation is fine, but we need to indicate when a key is pressed and when it should be released. The 1s and 2s indicate when A and B are pressed respectively. The 0s indicate that nothing is being pressed. Is it bad and naughty that I’m sending a constant stream of zeros when nothing is happening? I’ll fix it in the first beta/third production patch.

I’ve never used Node before, so I had a go at using Node as per Sam’s project. It works great for his purposes because there’s a specific Node module for integration with Spotify. I needed something more general-purpose. The only two modules I could find were a keyboard simulator that is a wrapper for a JAR file and requires a full-blown Java VM, and RobotJS, which is also pretty big for my needs, but hey, let’s give the robot a go.

The issue with robot is that it implements a function called “keyTap”, which, well, taps a key. If you’ve ever watched someone use a controller, they ain’t sittin’ there tapping the buttons, they’re pressing and holding most of the time.

No dice. Back to Python.

Part 2 – Host Program

    import serial
    from pynput.keyboard import Key, Controller

    ser = serial.Serial('/dev/tty.usbmodem14202', 115200)
    keyboard = Controller()
    last = 0  # 1 while a key is held, so releases are only sent once

    while 1:
        # Read one byte (an ASCII digit) sent by the Micro:bit
        serial_line = int(ser.read())
        if serial_line != 0:
            last = 1
            if serial_line == 1:
                keyboard.press('a')
            elif serial_line == 2:
                keyboard.press('d')
        elif last != 0:
            # First 0 after a press: release both keys, then go quiet
            keyboard.release('a')
            keyboard.release('d')
            last = 0

Python provides two modules for our purposes: serial and pynput.

Pynput gives us much finer control over keyboard simulation – pressing and holding and releasing keys, among other functions.

There’s really not a lot to the host program – it’s surprisingly simple. The one point to note is the use of the “last” variable.

During testing, I noticed that the host program was blocking normal keyboard input – this is because the constant stream of 0s was causing it to continuously trigger key releases for the related keys, rendering the keyboard useless for those keys. The use of a test for whether or not the release command has been sent removes that unintended side effect. I investigated putting similar code into the Micro:bit program, but the nature of the byte stream meant that the 0s didn’t always register with the host program.
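The effect of the “last” flag is easier to see with the serial port and keyboard taken out of the picture. The sketch below replays the same logic over a plain list of codes and records what the host program would do; the function name and the event tuples are invented for this illustration.

```python
def key_events(codes):
    """Turn a stream of 0/1/2 codes into press/release events.

    Mirrors the host loop: 1 presses 'a', 2 presses 'd', and a 0 only
    releases keys if something was pressed since the last release.
    """
    events = []
    last = 0  # same role as `last` in the host program
    for code in codes:
        if code != 0:
            last = 1
            if code == 1:
                events.append(("press", "a"))
            elif code == 2:
                events.append(("press", "d"))
        elif last != 0:
            events.append(("release", "a"))
            events.append(("release", "d"))
            last = 0
    return events


# A long run of zeros produces no events at all, which is the whole point.
print(key_events([0, 0, 0, 0]))  # []
```

Feeding it something like `[1, 1, 0, 0, 0]` gives two presses followed by a single pair of releases, rather than a release for every zero.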

Any necessary improvements are indicated in the todo list below.

Wait – what’s the point?

“But Jonathan,” you say, “What’s the point of a controller with only two buttons? Even Tetris requires at least four!”

Ah, you’re forgetting about… Tron:

Armagetron Advanced, to be exact. Played with just two buttons (unless you’re a coward who uses the brakes).

In all seriousness, the proof of concept is for a more ambitious project: Micro:bits can be easily connected to a breakout board which allows for a wide array of inputs, buttons etc to be connected to its IO pins.

In theory (and what I’d like to do with my video game design class) one could design the composition and layout of their own “ideal” controller and create a Micro:bit program to pipe commands to serial.

Laser cutting or 3D printing the necessary structure of the controller should be straightforward enough (we’re not shooting for aesthetic design awards). The end result is a custom controller powered by any Micro:bit.

Now that this proof of concept is complete, there’s a little more work to be done.

Todo List

- Test with compiled, rather than interpreted, code on both the host and Micro:bit. It could have been my imagination, but it felt that the controller was a touch delayed, which would make sense given the pipeline from physical button to simulated keypress.

- Test with two Micro:bits – using the tethered MB as a dongle for the “wireless” one.

- Connect external physical buttons and joysticks to the MB IO pins. This process is well documented and I do not anticipate it to be particularly difficult4

- Investigate removing or mitigating the zero stream when the device is idle.

- Investigate using Bluetooth to connect as a direct serial device without pairing – no idea if possible or easy, but would allow a similar serial streaming process without the need for a dongle MB.

- Modify the host program to autodetect the appropriate port.

- Verify cross-platform compatibility (read: Windows).

- Modify host program to allow for a button to be held down while tapping another.

- Design and implement a physical casing for a customised controller.
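For the port-autodetection item, one possible approach is to ask pyserial for the list of serial ports and pick the one whose USB IDs look like a Micro:bit. This is only a sketch: the vendor and product IDs below are what I understand the Micro:bit's USB interface chip to report, so treat them as assumptions and check them against your own device. The function name is hypothetical.

```python
MICROBIT_VID = 0x0D28  # assumed USB vendor ID of the Micro:bit interface chip
MICROBIT_PID = 0x0204  # assumed matching product ID


def find_microbit_port(ports=None):
    """Return the device path of the first Micro:bit-looking port, or None."""
    if ports is None:
        # Imported lazily so the matching logic can be tried without pyserial.
        from serial.tools import list_ports
        ports = list_ports.comports()
    for port in ports:
        # Each entry exposes .device (the path), .vid and .pid.
        if port.vid == MICROBIT_VID and port.pid == MICROBIT_PID:
            return port.device
    return None
```

The host program could then open `serial.Serial(find_microbit_port(), 115200)` instead of hard-coding `/dev/tty.usbmodem14202`, after checking that the result is not `None`.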

Definitely a job to finish in 2019, but happy to have found this process so easy to do (in comparison to native Bluetooth pairing).

Paint IP on Epaper: RPi Startup

I’m currently playing around with one of these E-paper modules:

The panel is great for its price and ease of use – Waveshare ship this model with a Raspberry Pi hat and provide a number of software libraries for interacting with them.

I’ll follow this up with a breakdown on how I created my wall clock, but just quickly, a quibble with Raspberry Pi/headless systems in general:

My place of work has a… restrictive network when it comes to BYOD. It’s better than it used to be, but unless you can easily connect to an enterprise wifi network (difficult, but not impossible with the Pi) you’ll need to connect an ethernet cable (and not mention it to the techs). In either case, you’ll get an IP address. But you won’t be able to figure out what IP address without plugging in a display.

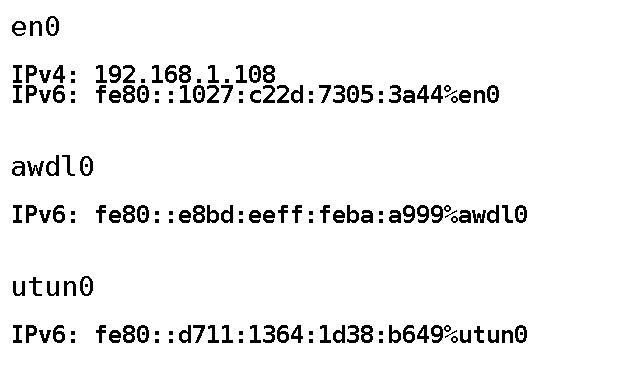

There is an opportunity with a display like this to work around this problem: run a program on start up to push the IP addresses of all network interfaces to the panel.

Without further ado, PaintIP, a small Python script that will get a list of IPs for your interfaces and paint them to the panel:

The “screenshot” pictured here is actually the image generated by the program which is then pushed to the panel.

It’s a minor thing, but sometimes the ability to bring your device in from home, plug it in and immediately know which IP to SSH to is a lifesaver.
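A rough sketch of the idea, not the actual PaintIP script: it assumes a Linux system with the `ip` command, Pillow for drawing, and the 640x384 resolution used in the convert command later in this post. Pushing the image to the panel is left out, since that part depends on Waveshare's library; all function names here are made up.

```python
import subprocess


def parse_ipv4(ip_output):
    """Pull (interface, address) pairs out of `ip -o -4 addr show` output."""
    pairs = []
    for line in ip_output.splitlines():
        fields = line.split()
        # Expected shape: "2: eth0 inet 192.168.1.20/24 brd ..."
        if len(fields) >= 4 and fields[2] == "inet":
            name, address = fields[1], fields[3].split("/")[0]
            if name != "lo":  # loopback is no use for SSH
                pairs.append((name, address))
    return pairs


def current_pairs():
    """Ask the system for its addresses (Linux `ip` command assumed)."""
    out = subprocess.run(
        ["ip", "-o", "-4", "addr", "show"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_ipv4(out)


def render(pairs, size=(640, 384)):
    """Draw one 'iface: address' line per interface on a 1-bit image."""
    from PIL import Image, ImageDraw  # Pillow, imported lazily

    image = Image.new("1", size, 1)  # mode "1" is 1-bit; 1 = white background
    draw = ImageDraw.Draw(image)
    for row, (name, address) in enumerate(pairs):
        draw.text((10, 10 + 20 * row), f"{name}: {address}", fill=0)
    return image
```

Something like `render(current_pairs()).save("ips.bmp")` would produce an image that a display script could then push to the panel.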

Rejected (and hilarious) alternative:

A program which simply takes a screenshot of the primary console, munges it into the right resolution at 1 bit colour and paints the display every 6 seconds (why 6 seconds? because this particular panel has a 6 second refresh – not ideal, but mostly workable).

I actually made this abomination, based on a technique from this here blog post.

    #!/bin/sh
    snapscreenshot -c1 -x1 -12 > console.tga
    convert console.tga -depth 1 -colors 2 -colorspace gray -resize 640 -negate -gravity center -extent 640x384 -sharpen 0x3.5 BMP3:console_bw.bmp
    python curator.py console_bw.bmp

It literally just uses snapscreenshot and ImageMagick’s convert program to create the 1 bit image of appropriate resolution and then calls a Python script to display said image.

Could I have put all this in a single Python script? Probably, but it’d have system calls to run snapscreenshot and convert (Python’s ImageMagick libraries leave much to be desired) and honestly I just needed the thing working now.

Curator is a very basic script that takes an image as an argument and displays it on the E-Paper panel – barely a modification of Waveshare’s example script.

Still, there might be the odd time when it’ll be useful to see a shot of the console for debug purposes – nothing stopping someone from configuring a hardware button to run the console dump shellscript.

Oh, and there are a handful of ways to get a program running on startup with a Pi, but the one I ended up using is simply inserting it into rc.local.