If you’re stuck behind a school or university firewall, you’ll often find that they’re unreasonably restrictive (as a user – as an administrator, well actually, most of the admins probably think it’s a bit over the top too, given it really doesn’t stop much untoward behaviour for the inconvenience caused).

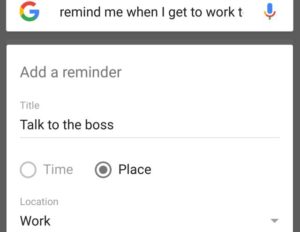

As long as you want web traffic to sites that haven’t been blacklisted or have restricted keywords in the URL (sigh), you’ll be fine. But if, for example, you need SSH access to a *nix server offsite, you’re stuck using various web based SSH console solutions.

As always, there are a variety of ways around it: some more complex than others. But a good place to start is the fact that most corporate firewalls are not only unreasonably restrictive – they’re also lazy.

Port 443 is used for secure web traffic, and the firewall can’t really do much to inspect the back-and-forth through that port (you know, by design), so in many cases, they just let traffic through without even bothering to check that it’s actually HTTPS.

I mean, really. If someone is trying to get access through port 22, they can probably figure out how to achieve the same end through 443 (this post, case-in-point).

Enter the demultiplexers – software tools to simply listen on 443 and direct SSH traffic to sshd and HTTPS traffic to httpd (the two kinds of traffic are trivially and flawlessly distinguishable).

Continue Reading…